Keyword [xUnit] [Activation Unit] [GCN]

Kligvasser I, Rott Shaham T, Michaeli T. xUnit: learning a spatial activation function for efficient image restoration[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 2433-2442.

1. Overview

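Current state:

- Activation units (nonlinearities) hold 0% of a network's parameters; the parameters sit in the Conv layers (spatial processing)

- Networks keep getting deeper and deeper

The paper therefore proposes the xUnit structure:

- a learnable nonlinear function with spatial connections

- reduces the number of layers needed

- applied to low-level tasks (denoising, de-raining, super-resolution)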

1.1. Related Work

- ELUs

- SRCNN

- VDSR

- SRResNet

- EDSR

- ESPCN

- Binarized Neural Networks

- Deep Detail Network

- DehazeNet

- MobileNet

1.2. Model

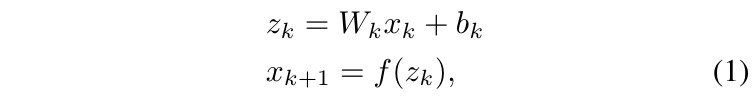

The Conv+Activation forms are as follows:

1.2.1. ReLU

- `o` denotes element-wise multiplication; 0/0 is defined as 0.
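The view of ReLU as an element-wise product with a gate can be sketched as follows. This is a minimal illustration, not the paper's code: the function names are hypothetical, and the sign-based gate is only one possible reading of why the note defines 0/0 = 0.

```python
def relu_as_gate(z):
    """Write ReLU as z (element-wise product) gate, with a binary gate."""
    gate = [1.0 if v > 0 else 0.0 for v in z]   # 1 where z > 0, else 0
    out = [v * g for v, g in zip(z, gate)]      # element-wise product
    return gate, out


def sign_gate(z):
    """Assumed alternative: gate = 0.5 * (1 + z / |z|), with 0/0 taken as 0."""
    gate = []
    for v in z:
        ratio = v / abs(v) if v != 0 else 0.0   # convention: 0/0 = 0
        gate.append(0.5 * (1.0 + ratio))
    return gate


z = [-2.0, 0.0, 3.0]
gate, out = relu_as_gate(z)
# Both gates give the same product as the usual max(z, 0).
assert out == [max(v, 0.0) for v in z]
assert [v * g for v, g in zip(z, sign_gate(z))] == out
```

In both variants the gate depends only on the value at the same position, which is the limitation the spatial gate of xUnit is meant to lift.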

1.2.2. xUnit

H_k is a depth-wise convolution.

For a d-channel input and a d-channel output, the computational complexity (with an r × r kernel) is:

- Standard Conv.: r × r × d × d

- Depth-wise Conv.: r × r × d
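A rough sketch of a spatially connected gate built on a depth-wise convolution is given below. The notes only state that H_k is depth-wise; the ReLU before the convolution and the Gaussian exp(-d²) gate are assumptions made here for illustration, batch normalization is omitted, and all function names are hypothetical.

```python
import math


def depthwise_conv(x, kernels):
    """Depth-wise convolution (cross-correlation, zero padding, stride 1).

    x:       list of d channels, each an h x w list of lists
    kernels: list of d kernels, each r x r with r odd;
             channel c is filtered only by kernels[c]
    """
    out = []
    for channel, kernel in zip(x, kernels):
        h, w, r = len(channel), len(channel[0]), len(kernel)
        pad = r // 2
        result = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for a in range(r):
                    for b in range(r):
                        ii, jj = i + a - pad, j + b - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            acc += kernel[a][b] * channel[ii][jj]
                result[i][j] = acc
        out.append(result)
    return out


def xunit_sketch(z, kernels):
    """Gate each position of z using its spatial neighbourhood."""
    rectified = [[[max(v, 0.0) for v in row] for row in ch] for ch in z]
    d = depthwise_conv(rectified, kernels)
    # Assumed Gaussian gate: values lie between 0 and 1.
    gate = [[[math.exp(-v * v) for v in row] for row in ch] for ch in d]
    out = [[[zv * gv for zv, gv in zip(zr, gr)] for zr, gr in zip(zc, gc)]
           for zc, gc in zip(z, gate)]
    return gate, out


# One 2 x 2 channel with an all-zero 3 x 3 kernel: the gate is 1 everywhere.
z = [[[1.0, -1.0], [0.0, 2.0]]]
gate, out = xunit_sketch(z, [[[0.0] * 3 for _ in range(3)]])
assert out == z
```

Unlike the per-element gate of ReLU, each gate value here depends on an r × r neighbourhood, and the kernel weights are where the learnable parameters would live.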
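The two counts above can be compared numerically. The values r = 3 and d = 64 below are arbitrary illustration values, not settings taken from the paper, and the function names are hypothetical.

```python
def standard_conv_weights(r, d):
    """r x r kernel for every (input channel, output channel) pair."""
    return r * r * d * d


def depthwise_conv_weights(r, d):
    """One r x r kernel per channel."""
    return r * r * d


r, d = 3, 64  # illustrative values only
standard = standard_conv_weights(r, d)    # 36864
depthwise = depthwise_conv_weights(r, d)  # 576
print(standard, depthwise, standard // depthwise)  # ratio equals d
```

The depth-wise layer is cheaper by a factor of d, which is why adding it inside the activation costs little compared with the standard convolutions.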

2. Experiments

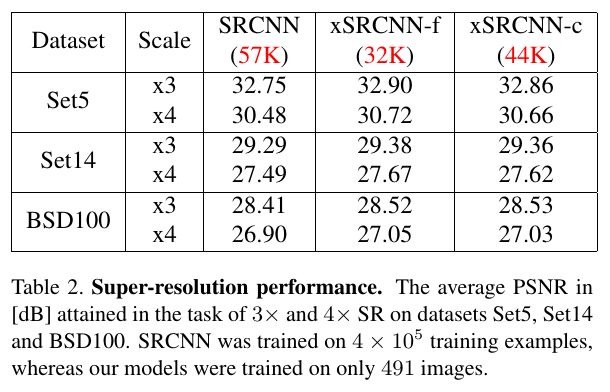

2.1. Comparison

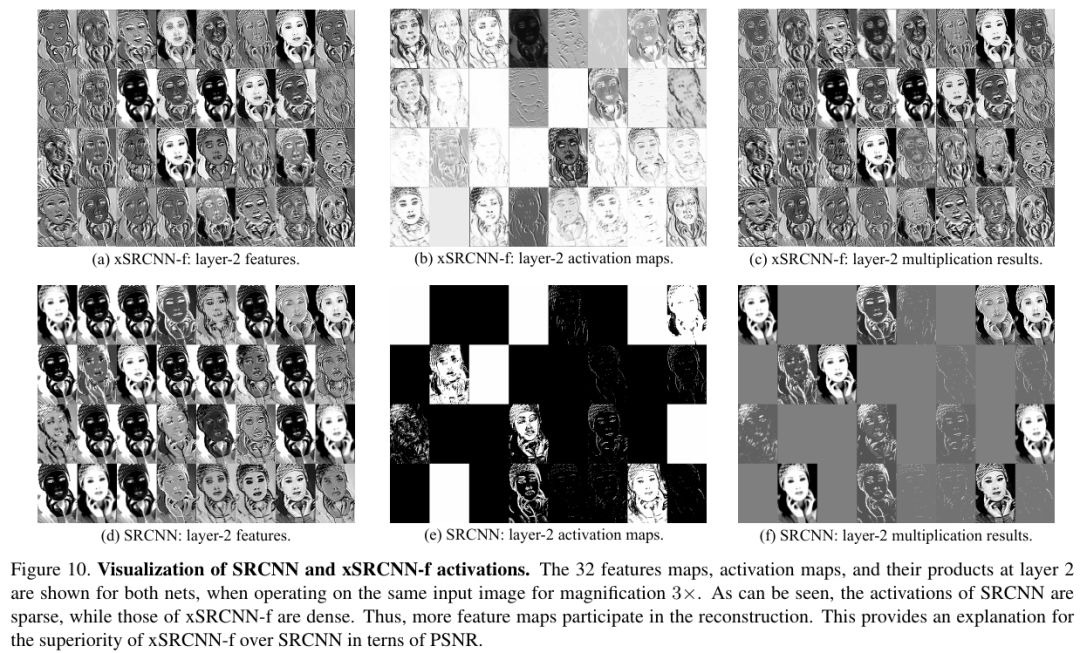

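2.2. Feature Map Visualization

ReLU discards most of the feature maps (black), whereas with xUnit most of the feature maps take part in the subsequent computation (white).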

2.3. Denoising

2.4. De-raining

PSNR: 28.94 vs. 31.17.
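For reading PSNR figures like these, the standard definition PSNR = 10 · log10(MAX² / MSE) can be sketched as below. The function names and the 8-bit peak value of 255 are assumptions for illustration; the notes do not say how the paper's numbers were computed.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_from_psnr_gap(gap_db):
    """Factor by which MSE shrinks when PSNR rises by gap_db."""
    return 10 ** (gap_db / 10)


# The two figures above differ by 31.17 - 28.94 = 2.23 dB.
print(mse_ratio_from_psnr_gap(31.17 - 28.94))
```

Because PSNR is logarithmic, a gap of a couple of dB corresponds to a sizeable drop in mean squared error.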

2.5. Super Resolution